HTTP Live Streaming in the Metaverse?

Sylwester Mielniczuk

Web Developer Advocate

Editor’s note: Welcome to Sylwester who has recently joined our team to work on Web apps (including WebXR apps) as part of the 5G Tours project — Daniel Appelquist

This article is for immersive web developers who use the WebXR API with JavaScript libraries like ThreeJS and need a better, more sustainable way to deliver video content in open Extended Realities (VR/AR/MR).

We will also use the HLS.js library, a Unix shell terminal (Bash) with FFmpeg installed, and an HTTP server (Nginx). Intermediate knowledge of the front-end development stack is assumed.

The WebXR Device API provides access to virtual reality (VR) and augmented reality (AR) devices, including sensors and head-mounted displays.

Background

I was recently involved in the EU Horizon project “5G Tours” (touristic city, safe city, and mobility-efficient city use cases), where we were finishing several use cases and testing and experimenting across prototyped XR experiences. During a user journey, we provide several video clips (short as well as lengthy) with additional information and guidelines. Depending on the 5G network service layering and slicing architecture, delivering such video can be a very heavy and expensive payload. The experience should be smooth, the video quality should adapt automatically without user interaction, and the use of the 5G network should be fully justified.

The most efficient way to stream relatively high-quality video on demand is to let the client fetch short, time-indexed chunks of compressed data (listed in a playlist file) instead of loading or preloading one huge file. (For example, our original 1080p file is 500MB.)
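To make the idea of "time targeted chunks" concrete, here is a minimal sketch of how a client could read a media playlist and find the chunk that covers a given playback time. The playlist text, the function names `parseMediaPlaylist` and `segmentAt`, and the segment durations are illustrative assumptions. A real player such as HLS.js does considerably more.

```javascript
// Hypothetical VOD media playlist, shaped like the output of an HLS packager.
const samplePlaylist = `#EXTM3U
#EXT-X-VERSION:7
#EXT-X-TARGETDURATION:6
#EXT-X-MAP:URI="init.mp4"
#EXTINF:6.000000,
fileSequence0.m4s
#EXTINF:6.000000,
fileSequence1.m4s
#EXTINF:4.000000,
fileSequence2.m4s
#EXT-X-ENDLIST`;

// Collect each segment URI with its duration and start time on the timeline.
function parseMediaPlaylist(text) {
  const segments = [];
  let pendingDuration = null;
  let start = 0;
  for (const raw of text.split(/\r?\n/)) {
    const line = raw.trim();
    if (line.startsWith("#EXTINF:")) {
      // "#EXTINF:<duration>,<optional title>"
      pendingDuration = parseFloat(line.slice("#EXTINF:".length));
    } else if (line && !line.startsWith("#") && pendingDuration !== null) {
      segments.push({ uri: line, start, duration: pendingDuration });
      start += pendingDuration;
      pendingDuration = null;
    }
  }
  return segments;
}

// Find the segment that contains playback time t (in seconds), or null.
function segmentAt(segments, t) {
  return segments.find((s) => t >= s.start && t < s.start + s.duration) || null;
}
```

Seeking to second 7 therefore only needs `fileSequence1.m4s` (plus the init segment), not the whole video.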

HLS, or HTTP Live Streaming, is smart. It fulfills one of our fundamental requirements: with some extra preparation, it can smoothly change the stream bitrate according to the connection quality, and it avoids overtaxing mobile devices and draining their batteries.
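The bitrate switching can be pictured with a small sketch. This is not how HLS.js implements it internally. It is only a simplified illustration of the principle, and the rendition list, the `pickRendition` name, and the 0.8 safety factor are assumptions of mine.

```javascript
// Hypothetical renditions, as they might be advertised in a master playlist.
const renditions = [
  { name: "360p", bandwidth: 800000 },
  { name: "720p", bandwidth: 1500000 },
  { name: "1080p", bandwidth: 5000000 },
];

// Pick the highest rendition whose declared bandwidth fits within a fraction
// of the measured throughput; fall back to the lowest one if nothing fits.
function pickRendition(list, measuredBps, safetyFactor = 0.8) {
  const sorted = [...list].sort((a, b) => a.bandwidth - b.bandwidth);
  const budget = measuredBps * safetyFactor;
  let choice = sorted[0];
  for (const r of sorted) {
    if (r.bandwidth <= budget) choice = r;
  }
  return choice;
}
```

With a measured throughput of 2 Mbit/s the budget is 1.6 Mbit/s, so the 720p rendition is chosen. When the connection degrades, the same function returns a lower rendition for the next chunk.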

History: HTTP Live Streaming (HLS) was Apple’s answer to Flash. Streaming video in the past typically meant having to rely on proprietary protocols and special server software. To stream video with HTTP Live Streaming, all you need is a web server. HLS is supported by other platforms such as Roku — the streaming media player that connects to your TV — and Android, to name a few. [https://hlsbook.net/]

Let’s start coding

I found a JavaScript HLS library that works with our WebXR projects: https://github.com/video-dev/hls.js (NPM: https://www.npmjs.com/package/hls.js/v/canary)

HLS.js is: a JavaScript library that implements an HTTP Live Streaming client. It relies on HTML5 video and MediaSource Extensions for playback.

It works by transmuxing MPEG-2 Transport Stream and AAC/MP3 streams into ISO BMFF (MP4) fragments. Transmuxing is performed asynchronously using a Web Worker when available in the browser. HLS.js also supports HLS + fragmented MP4.

HLS.js works directly on top of a standard HTML <video> element. […]

Using FFmpeg, the open-source Swiss Army knife for media file manipulation, we can generate the desired static HLS setup. Below is an example shell script (which in this case also slightly reduces the bitrate and resolution of the original video).

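#!/bin/sh
# Re-encode to 720p H.264 at about 1.5 Mbit/s, force a keyframe every 2 seconds,
# and cut the result into 6-second fragmented MP4 segments with a VOD playlist.
ffmpeg -y \
  -i myrtis_1080_eng.mp4 \
  -force_key_frames "expr:gte(t,n_forced*2)" \
  -sc_threshold 0 \
  -s 1280x720 \
  -c:v libx264 -b:v 1500k \
  -c:a copy \
  -hls_time 6 \
  -hls_playlist_type vod \
  -hls_segment_type fmp4 \
  -hls_segment_filename "fileSequence%d.m4s" \
  prog_index.m3u8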

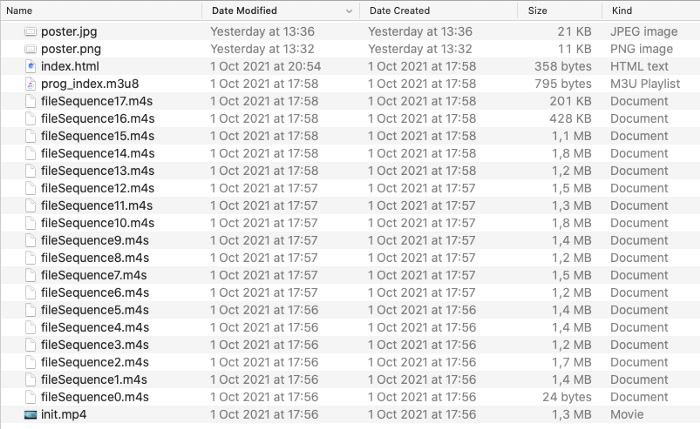

It produced about 20 files totalling 22MB, down from the original 500MB.
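The script produces a single rendition. For the player to switch quality on its own, each rendition (one FFmpeg run per resolution and bitrate) needs to be listed in a master playlist. Below is a minimal sketch of generating one. The directory names, the second 360p rendition, and the `buildMasterPlaylist` helper are hypothetical, and the BANDWIDTH values are rough placeholders (in practice they should reflect the peak bitrate of video plus audio).

```javascript
// Build the text of an HLS master playlist from a list of variant streams.
function buildMasterPlaylist(variants) {
  const lines = ["#EXTM3U"];
  for (const v of variants) {
    lines.push(
      `#EXT-X-STREAM-INF:BANDWIDTH=${v.bandwidth},RESOLUTION=${v.width}x${v.height}`
    );
    lines.push(v.uri); // URI of that rendition's media playlist
  }
  return lines.join("\n") + "\n";
}

// Hypothetical layout: one folder per rendition, each with its own prog_index.m3u8.
const master = buildMasterPlaylist([
  { bandwidth: 800000, width: 640, height: 360, uri: "360p/prog_index.m3u8" },
  { bandwidth: 1500000, width: 1280, height: 720, uri: "720p/prog_index.m3u8" },
]);
console.log(master);
```

The player is then pointed at the master playlist instead of a single `prog_index.m3u8`.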

To quickly test whether this fragmented content (playlist: .m3u8, fragmented MP4s: .m4s) works, I created an index.html that links to the Video.js library (which provides an HTML5 HLS video player in the DOM) and uploaded everything to the server:

HLS non-XR player

https://xr.workwork.fun/samsung/quiz/quiz1/media/

<!DOCTYPE html>
<html>
<head>
<link href="https://vjs.zencdn.net/7.4.1/video-js.css" rel="stylesheet">
</head>
<body>
<video class="video-js" width="1280" height="720" data-setup='{}' controls>
<source src="prog_index.m3u8" type="application/x-mpegURL">
</video>
<script src='https://vjs.zencdn.net/7.4.1/video.js'></script>
</body>
</html>

With the snippet above, the video content streams smoothly in a DOM player!

Interesting hint: HLS also supports byte-range addressing. To use this feature with fMP4, set the -hls_flags option to single_file. Instead of creating several (smaller) files, it will create a single file containing all the media segments. If you look at the playlist, the location of each segment is specified as a byte offset in the file. [https://hlsbook.net/hls-fragmented-mp4/]
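To illustrate what those byte offsets mean for the client, here is a small sketch that turns `#EXT-X-BYTERANGE` tags into HTTP `Range` header values. The sample playlist and the `byteRangesToHttp` name are made up for illustration. The tag syntax is `<length>[@<offset>]`, and when the offset is omitted the range starts right after the previous one.

```javascript
// Hypothetical single-file playlist; sizes and offsets are invented.
const singleFilePlaylist = `#EXTM3U
#EXT-X-VERSION:7
#EXT-X-TARGETDURATION:6
#EXT-X-MAP:URI="main.mp4",BYTERANGE="1000@0"
#EXTINF:6.0,
#EXT-X-BYTERANGE:5000@1000
main.mp4
#EXTINF:6.0,
#EXT-X-BYTERANGE:4000
main.mp4
#EXT-X-ENDLIST`;

// Convert each media segment's EXT-X-BYTERANGE into an HTTP Range value.
// (The init segment's BYTERANGE attribute on EXT-X-MAP is not handled here.)
function byteRangesToHttp(playlistText) {
  const ranges = [];
  let nextOffset = 0;
  for (const line of playlistText.split(/\r?\n/)) {
    const m = /^#EXT-X-BYTERANGE:(\d+)(?:@(\d+))?/.exec(line.trim());
    if (!m) continue;
    const length = Number(m[1]);
    const offset = m[2] !== undefined ? Number(m[2]) : nextOffset;
    // HTTP ranges are inclusive on both ends.
    ranges.push(`bytes=${offset}-${offset + length - 1}`);
    nextOffset = offset + length;
  }
  return ranges;
}
```

Each returned string can be sent as the `Range` request header when fetching the single media file.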

The next step is to use this video stream as a video texture on a plane geometry (which we called “tvset”) in the ThreeJS XR project.

/* VIDEO SOURCE */
const videoSrc = "https://xr.workwork.fun/samsung/quiz/quizvideo/assets/media/prog_index.m3u8";
// The hidden <video id="video"> element from the page; the stream itself is attached via HLS.js below.
const video = document.getElementById("video");
// crossOrigin belongs on the media element (not on the texture).
video.crossOrigin = "anonymous";
/* VIDEO TEXTURE */
const videoTexture = new THREE.VideoTexture(video);
videoTexture.minFilter = THREE.LinearFilter;
videoTexture.magFilter = THREE.LinearFilter;
/* PLANE GEOMETRY FOR VIDEO TEXTURE */
// A single material carrying the video texture (the original declared movieMaterial twice, which is a syntax error).
const movieMaterial = new THREE.MeshBasicMaterial({ map: videoTexture, side: THREE.DoubleSide });
const movieGeometry = new THREE.PlaneGeometry(1.78 * 2, 1 * 2, 4, 4);
const tvset = new THREE.Mesh(movieGeometry, movieMaterial);
scene.add(tvset);
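The hard-coded 1.78 is roughly a 16:9 aspect ratio. If the clips do not all share that ratio, the plane size can be derived from the video's intrinsic dimensions once its metadata has loaded. The helper below is a sketch under that assumption; `planeSizeForVideo` is a name I made up, and the three.js usage is shown only in comments because it needs the scene objects from the snippet above.

```javascript
// Compute plane dimensions that match the video's aspect ratio.
// Falls back to 16:9 while the dimensions are still unknown (0 x 0).
function planeSizeForVideo(videoWidth, videoHeight, planeHeight = 2) {
  const aspect =
    videoWidth > 0 && videoHeight > 0 ? videoWidth / videoHeight : 16 / 9;
  return { width: planeHeight * aspect, height: planeHeight };
}

// Possible usage in the scene (assumes `video`, `tvset`, and THREE exist):
// video.addEventListener("loadedmetadata", () => {
//   const size = planeSizeForVideo(video.videoWidth, video.videoHeight);
//   tvset.geometry.dispose();
//   tvset.geometry = new THREE.PlaneGeometry(size.width, size.height);
// });
```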

I’ve been familiar with this technology for a few years but have never done any HLS experiments in WebXR.

One of my early WebXR experiments involved RTMP live-stream mobile apps. By restreaming from multiple mobile devices via NGINX on a Linux server, I was able to CCTV myself. :)

That requires extra NGINX setup: an additional module and changes to nginx.conf. Here are some good instructions on how to do it on Ubuntu (highly recommended).

In our case, we have already produced the playlist and the fragmented MP4s, so no extra sysadmin skills are required. In the ThreeJS project we need to detect whether HLS is supported, then load the source and attach it to the video DOM element. Once the MANIFEST_PARSED event fires on the HLS instance, we assume the video is ready to play.

/* DETECTING IF HLS IS SUPPORTED */
if (Hls.isSupported()) {
  const hls = new Hls();
  hls.loadSource(videoSrc);
  hls.attachMedia(video);
  /* PLAYING */
  hls.on(Hls.Events.MANIFEST_PARSED, () => video.play());
}
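The check above does nothing when `Hls.isSupported()` returns false, which happens in browsers without MediaSource Extensions. Some of those browsers can play HLS natively, so a fallback may be worth adding. The sketch below wraps both paths in one function. `attachStream` and `tryPlay` are names of my own, and the HLS.js global is passed in as a parameter purely to keep the function easy to test.

```javascript
// Start playback and swallow the rejection browsers raise when autoplay
// is blocked until the user interacts with the page.
function tryPlay(video) {
  const result = video.play();
  if (result && typeof result.catch === "function") {
    result.catch((err) => console.warn("Playback not started yet:", err));
  }
}

// Attach an HLS source to a <video> element, preferring HLS.js and falling
// back to native HLS playback. Returns which path was taken.
function attachStream(video, src, HlsLib) {
  if (HlsLib && HlsLib.isSupported()) {
    const hls = new HlsLib();
    hls.loadSource(src);
    hls.attachMedia(video);
    hls.on(HlsLib.Events.MANIFEST_PARSED, () => tryPlay(video));
    return "hls.js";
  }
  if (video.canPlayType("application/vnd.apple.mpegurl")) {
    video.src = src; // the browser handles the playlist itself
    video.addEventListener("loadedmetadata", () => tryPlay(video), { once: true });
    return "native";
  }
  return "unsupported";
}

// In the page: attachStream(video, videoSrc, window.Hls);
```

In an XR session, playback usually starts from a user gesture anyway (entering VR), which also satisfies most autoplay policies.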

A video tag has to be present in the DOM. We hide it because the only part that needs to be visible is the WebGL/XR canvas, and we redirect the video stream into a video texture. We can now play and pause the video and attach HTML5 video event listeners while it is shown in XR.

<video id="video" style="display: none"></video>
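As an example of controlling the hidden element from inside XR, the sketch below toggles playback. The `togglePlayback` helper is hypothetical. The commented usage assumes a three.js `WebGLRenderer` named `renderer` with XR enabled, whose controllers dispatch a `select` event on trigger press.

```javascript
// Toggle the hidden <video> element and report which action was taken.
function togglePlayback(video) {
  if (video.paused) {
    const result = video.play();
    // play() returns a promise in modern browsers; ignore a blocked start.
    if (result && typeof result.catch === "function") result.catch(() => {});
    return "play";
  }
  video.pause();
  return "pause";
}

// Possible usage in the XR scene (assumes `renderer`, `scene`, `video` exist):
// const controller = renderer.xr.getController(0);
// controller.addEventListener("select", () => togglePlayback(video));
// scene.add(controller);
```

The video texture follows the element automatically, so pausing the element freezes the picture on the “tvset”.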

WebXR Demo: https://xr.workwork.fun/samsung/quiz/quizvideo2/ (requires an XR enabled browser like Samsung Internet).

Please check whether your platform of choice supports this standard. It might be behind a flag (check Settings > Virtual Reality Permissions) or work only with an XR extension.

Demo Video: https://youtu.be/6kzkjQ8D1-0

For more details, see the HLS.js API documentation: https://github.com/video-dev/hls.js/blob/master/docs/API.md. For more information on live streaming, visit the MDN page: https://developer.mozilla.org/en-US/docs/Web/Guide/Audio_and_video_delivery/Live_streaming_web_audio_and_video
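One part of that API worth wiring up on a mobile network is error handling. The sketch below follows the fatal error recovery pattern described in the HLS.js documentation. The wrapper function `installErrorRecovery` is my own, and the library object is passed in as a parameter so that the logic can be exercised without a browser.

```javascript
// Register a handler that tries to recover from fatal HLS.js errors.
// `hls` is an Hls instance; `HlsLib` is the Hls constructor/global.
function installErrorRecovery(hls, HlsLib) {
  hls.on(HlsLib.Events.ERROR, (event, data) => {
    if (!data.fatal) return; // non-fatal errors are handled by HLS.js itself
    switch (data.type) {
      case HlsLib.ErrorTypes.NETWORK_ERROR:
        hls.startLoad(); // retry loading after a network failure
        break;
      case HlsLib.ErrorTypes.MEDIA_ERROR:
        hls.recoverMediaError(); // try to recover from a decoding problem
        break;
      default:
        hls.destroy(); // unrecoverable: release resources
        break;
    }
  });
}

// In the page: installErrorRecovery(hls, Hls);
```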

What others say and interesting video streaming references:

HTTP Live Streaming (HLS) is the current ‘de facto’ streaming standard on the web. (https://api.video/blog/video-trends/what-is-hls-video-streaming-and-how-does-it-work)

State of the Web: Video Playback Metrics:

https://dougsillars.com/2019/09/16/state-of-the-web-video-playback-metrics/

Stream or Not: