Accelerate Your Neural Network with the Samsung Neural SDK

Samsung Neural Team

NOTE: This article assumes prior knowledge of machine learning. If you have any questions, please post them in the Samsung Neural forum.

Machine learning has transformed the technology industry by bringing human-like decision making to compact devices. From health care, real estate, and finance to computer vision, machine learning has reached almost every field, and many businesses now deploy it to gain a competitive edge for their products and services. One of the fastest-growing areas of machine learning is Deep Neural Networks (DNN), a branch of Artificial Intelligence (AI) inspired by the way neurons interact in the human brain.

With the AI industry growing so quickly, it is difficult not only to keep up with the latest innovations but even more so to deploy them in your business or application. As AI technology makes its way into the mobile industry, several questions arise:

- What can be achieved with the limited capacity of mobile embedded devices?

- How does one execute DNN models on mobile devices, and what are the implications of running a computationally intensive model on a low-resource device?

- How does it affect the user experience?

Typically, a deep neural network is designed and trained with a specific data set on a resource-rich GPU farm or server. The resulting pre-trained DNN model is then deployed to a target environment, such as a mobile device, where it runs inference to generate output. Developers can build on a pre-trained DNN model to create AI-based applications that bring new user experiences to mobile devices. A variety of pre-trained models, such as Inception, ResNet, and MobileNet, are available in the open source community.

The Samsung Neural SDK is Samsung’s in-house inference engine which efficiently executes a pre-trained DNN model on Samsung mobile devices. It is a one-stop solution for all application and DNN model developers who want to develop AI-based applications for Samsung mobile devices.

To simplify deploying applications that use neural network technology, the Samsung Neural SDK supports leading DNN model formats, such as Caffe, TensorFlow, TFLite, and ONNX, and lets you select among the compute units available on the device, such as the CPU, GPU, or AI processor.[1]
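Choosing a compute unit often comes down to trying the preferred accelerator first and falling back when the device lacks it. The sketch below illustrates that idea under stated assumptions: `ComputeUnit` and `select_compute_unit` are hypothetical names, not part of the Samsung Neural SDK API, and the fall-back-to-CPU policy is an illustrative assumption rather than documented SDK behavior.

```python
from enum import Enum


class ComputeUnit(Enum):
    """Hypothetical compute-unit identifiers (not Samsung Neural SDK names)."""
    CPU = "cpu"
    GPU = "gpu"
    NPU = "npu"  # neural processing unit (an AI processor)
    DSP = "dsp"  # digital signal processor (an AI processor)


def select_compute_unit(preferred, available):
    """Return the first unit in `preferred` that the device reports as available.

    Assumption: the CPU is present on every device, so it is the final fallback.
    """
    for unit in preferred:
        if unit in available:
            return unit
    return ComputeUnit.CPU


if __name__ == "__main__":
    # Example device with a GPU and a DSP but no NPU.
    device_units = {ComputeUnit.CPU, ComputeUnit.GPU, ComputeUnit.DSP}
    order = [ComputeUnit.NPU, ComputeUnit.DSP, ComputeUnit.GPU]
    print(select_compute_unit(order, device_units))  # ComputeUnit.DSP
```

Keeping the preference order separate from the device's capability set lets the same application code run on devices with different accelerators.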

The Samsung Neural SDK enables easy, efficient, and secure execution of pre-trained DNN models on Samsung mobile devices across differing hardware constraints, such as compute unit capability, memory configuration, and power limitations.

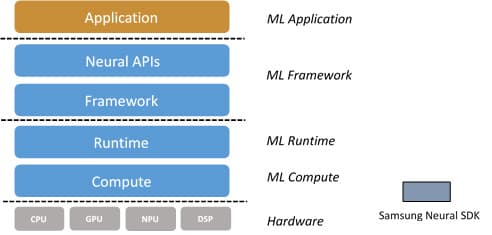

Samsung Neural Stack

Features

The Samsung Neural SDK provides simple APIs for deploying pre-trained or custom neural networks on-device. The SDK is designed to accelerate machine learning models and optimize hardware utilization, balancing performance and latency against memory use and power consumption.

The Samsung Neural SDK supports mixed-precision formats (FP32, FP16, and INT8) and a wide variety of operations, enabling you to experiment with different models and architectures to find what works best for your use case. It also applies industry-standard encryption to neural network models to protect your intellectual property.
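To make the precision formats concrete, the following sketch shows the widely used affine (scale and zero-point) scheme for mapping FP32 values to INT8, plus an FP16 round trip using Python's half-precision `struct` format. This is a generic illustration of the technique only; the article does not describe how the Samsung Neural SDK itself quantizes models, so the function names and the per-tensor asymmetric scheme are assumptions.

```python
import struct


def quantize_int8(values):
    """Per-tensor asymmetric (affine) quantization of floats to int8.

    Returns the int8 codes plus the (scale, zero_point) needed to dequantize.
    """
    lo = min(min(values), 0.0)  # include 0 so that 0.0 is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # 256 int8 levels; avoid zero scale
    zero_point = round(-128 - lo / scale)  # int8 code that represents 0.0
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize_int8(codes, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(q - zero_point) * scale for q in codes]


def to_fp16(value):
    """Round-trip a Python float through IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.5, 1.0]
    codes, scale, zp = quantize_int8(weights)
    print("int8 codes:", codes, "scale:", scale, "zero_point:", zp)
    print("dequantized:", dequantize_int8(codes, scale, zp))
    print("0.1 in FP16:", to_fp16(0.1))
```

Because the range always includes zero, 0.0 maps back to exactly 0.0, and each dequantized value differs from its original by at most about one quantization step (`scale`).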

The Samsung Neural SDK includes complete API documentation for reference, which describes all the optimization tools and supported operations and provides code examples. The accompanying sample benchmarking code shows how to use the API methods and demonstrates the available features and configurations, such as selecting a compute unit and an execution data type.
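The article does not show the SDK's benchmarking code, so the following is a hypothetical, framework-agnostic Python harness illustrating what such a benchmark typically measures: it discards warm-up runs, then reports latency statistics for any zero-argument callable. `benchmark` and `fake_inference` are illustrative names; in practice the callable would wrap a model invocation through whatever API the SDK provides.

```python
import statistics
import time


def benchmark(run_inference, warmup=5, iterations=50):
    """Time a zero-argument inference callable; return latency stats in ms.

    Warm-up runs are discarded so one-off costs (lazy initialization,
    cold caches) do not skew the measured latency.
    """
    for _ in range(warmup):
        run_inference()
    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return {
        "mean_ms": statistics.fmean(samples),
        "median_ms": statistics.median(samples),
        # Approximate 90th percentile: lower nearest-rank index.
        "p90_ms": samples[int(0.9 * (len(samples) - 1))],
        "min_ms": samples[0],
    }


def fake_inference():
    """Hypothetical stand-in for running a model on the selected compute unit."""
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    stats = benchmark(fake_inference)
    for name, value in stats.items():
        print(f"{name}: {value:.3f}")
```

Reporting the median and a high percentile alongside the mean helps when comparing compute units or data types, because on-device latency can vary from run to run, for example due to thermal throttling or background scheduling.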

The Samsung Neural SDK can be used in a wide range of applications that use Deep Neural Networks, improving their performance on Samsung mobile devices. It has already been applied to many use cases, and we look forward to supporting your application idea.

Are you interested in using the Samsung Neural SDK? Visit Samsung Neural SDK to learn more about becoming a partner today. Partners gain access to the SDK and technical content such as developer tips and sample code.

If you have questions about the Samsung Neural SDK, email us at sdk.neural@samsung.com.

[1] AI processors include neural processing units (NPUs) and digital signal processors (DSPs). The Samsung Neural SDK currently supports only the Caffe and TensorFlow formats.